QuickStart

Get up and running with Jan in minutes. This guide will help you install Jan, download a model, and start chatting immediately.

Step 1: Install Jan

- Download Jan

- Install the app (Mac, Windows, Linux)

- Launch Jan

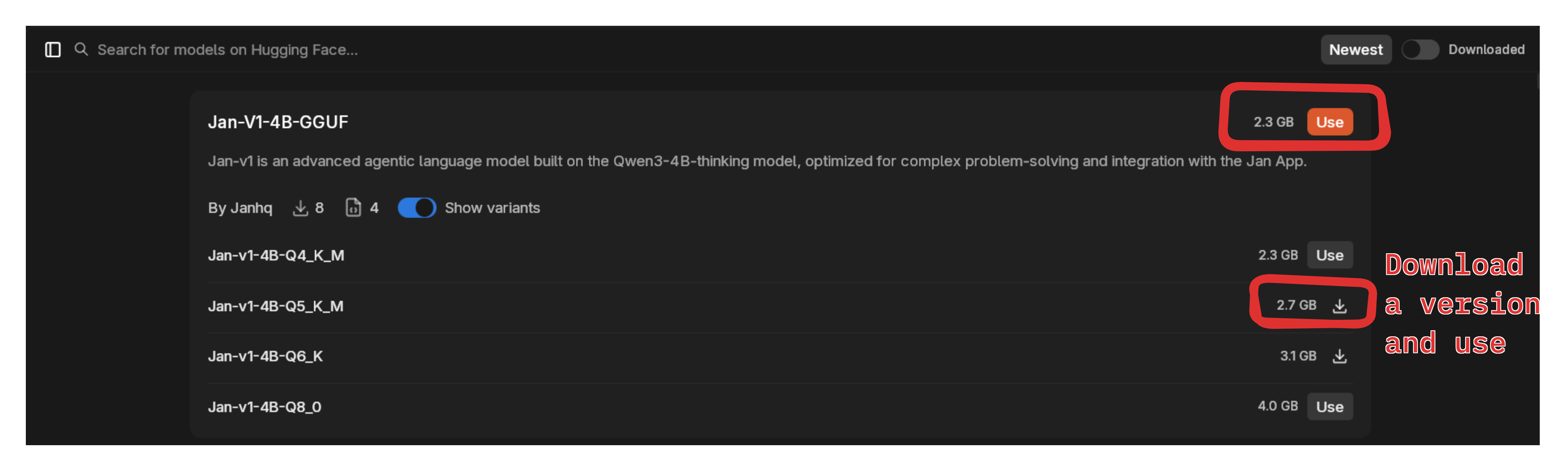

Step 2: Download Jan v1

We recommend starting with Jan v1, our 4B-parameter model optimized for reasoning and tool calling:

- Go to the Hub tab

- Search for Jan v1

- Choose a quantization that fits your hardware:

  - Q4_K_M (2.5 GB): good balance for most users

  - Q8_0 (4.28 GB): best quality if you have enough RAM

- Click Download
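As a rough illustration of "choose a quantization that fits your hardware", the sketch below picks the larger of the two listed files only when it fits in available RAM with some room to spare. The `pick_quantization` helper and the 2 GB headroom figure are hypothetical assumptions, not part of Jan; actual memory use also depends on context length and whatever else is running on your machine.

```python
from typing import Optional

# Download sizes (GB) for the Jan v1 quantizations listed above.
QUANT_SIZES_GB = {
    "Q4_K_M": 2.5,
    "Q8_0": 4.28,
}


def pick_quantization(available_ram_gb: float, headroom_gb: float = 2.0) -> Optional[str]:
    """Hypothetical helper: return the largest listed quantization whose file
    size plus an assumed headroom fits in available RAM, or None if none fits."""
    fitting = [
        (size, name)
        for name, size in QUANT_SIZES_GB.items()
        if size + headroom_gb <= available_ram_gb
    ]
    if not fitting:
        return None
    # Tuples compare by size first, so max() yields the largest file that fits.
    return max(fitting)[1]


if __name__ == "__main__":
    for ram in (4.0, 6.0, 16.0):
        print(f"{ram} GB RAM -> {pick_quantization(ram)}")
```

With these assumptions, 6 GB of free RAM selects Q4_K_M, while 16 GB is enough for Q8_0.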

Jan v1 achieves 91.1% accuracy on SimpleQA and excels at tool calling, making it well suited to web search and reasoning tasks.

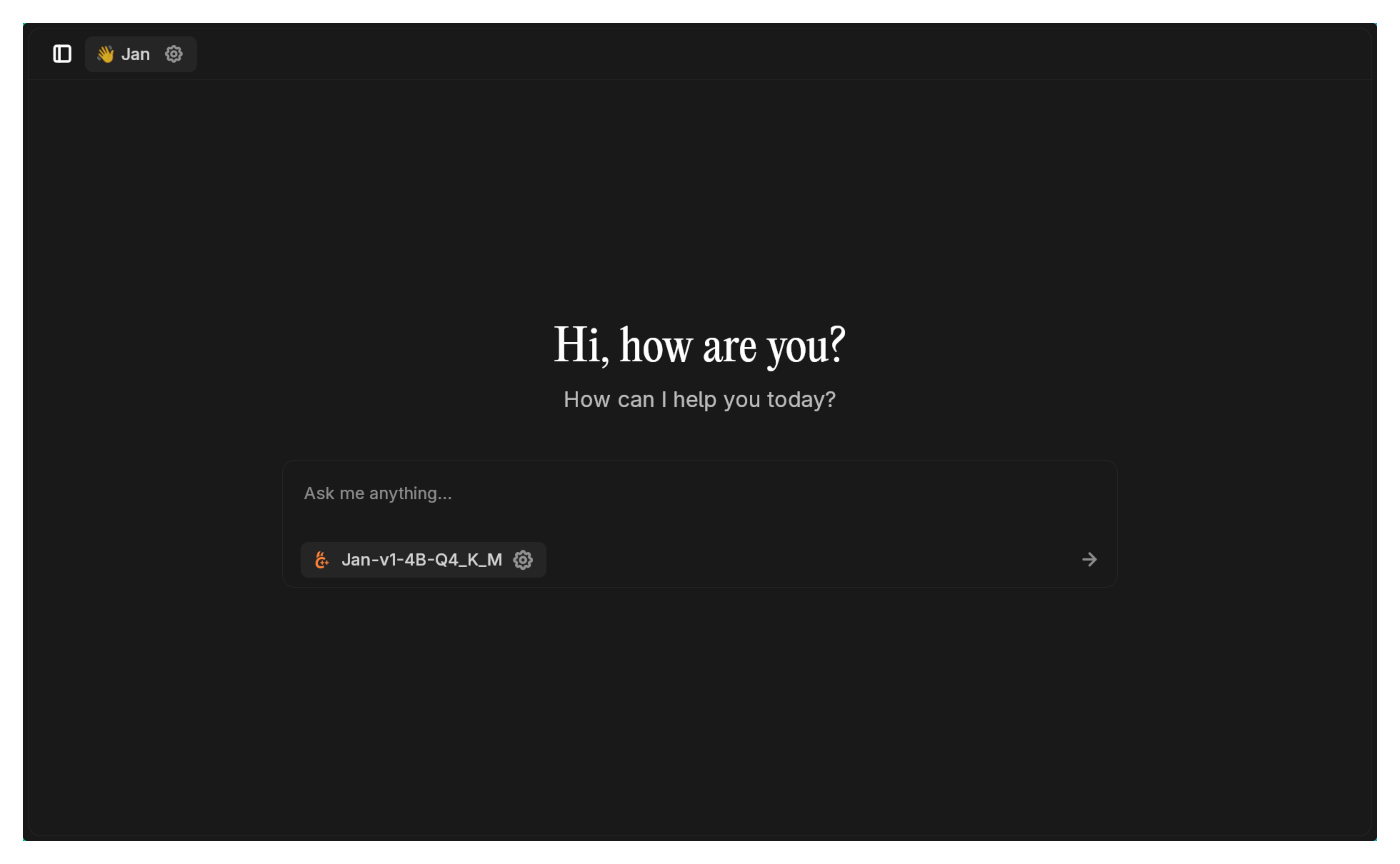

Step 3: Start Chatting

- Click the New Chat icon

- Select your model from the dropdown in the input field

- Type your message and start chatting

Try asking Jan v1 questions like:

- "Explain quantum computing in simple terms"

- "Help me write a Python function to sort a list"

- "What are the pros and cons of electric vehicles?"

Want to give Jan v1 access to current web information? Check out our Serper MCP tutorial to enable real-time web search with 2,500 free searches!

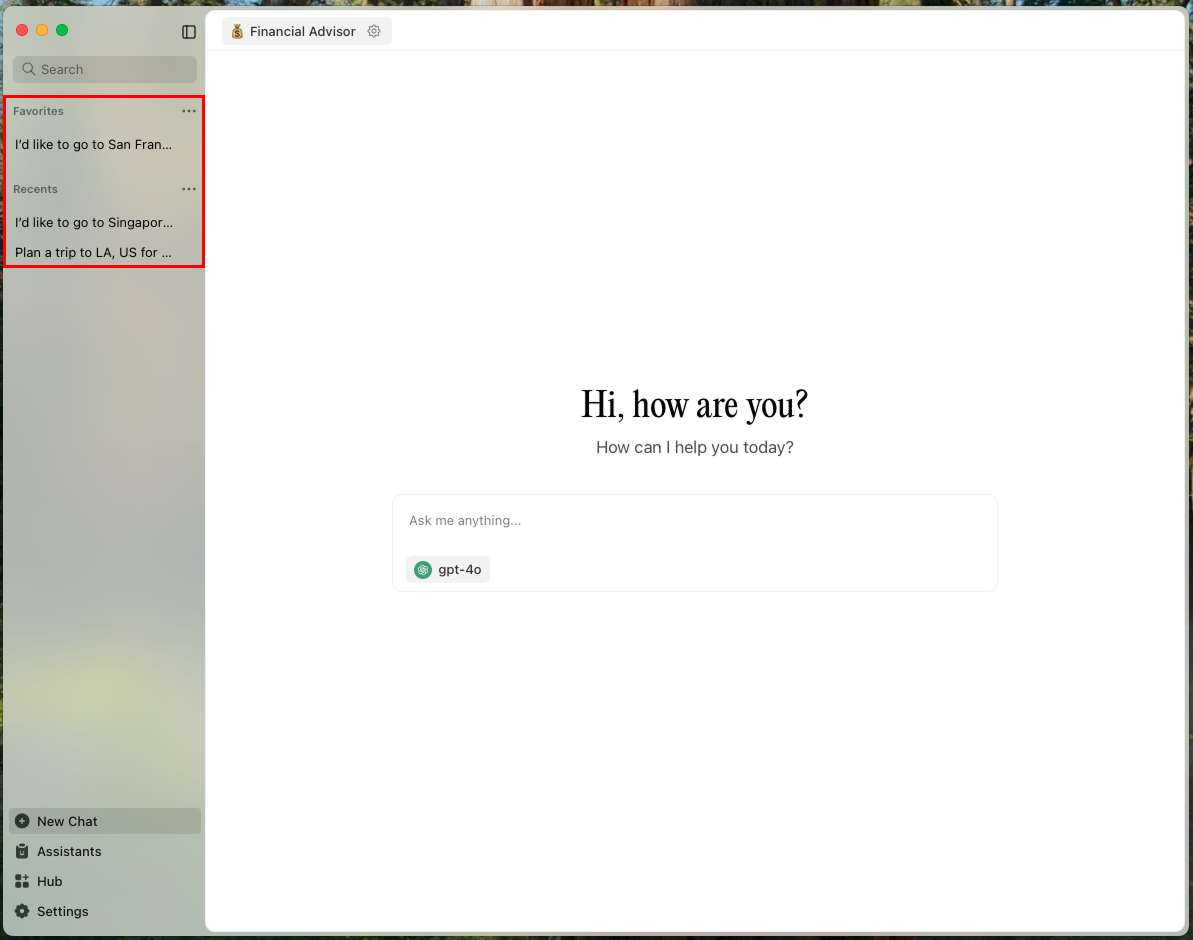

Managing Conversations

Jan organizes conversations into threads for easy tracking and revisiting.

View Chat History

- Left sidebar shows all conversations

- Click any chat to open the full conversation

- Favorites: Pin important threads for quick access

- Recents: Access recently used threads

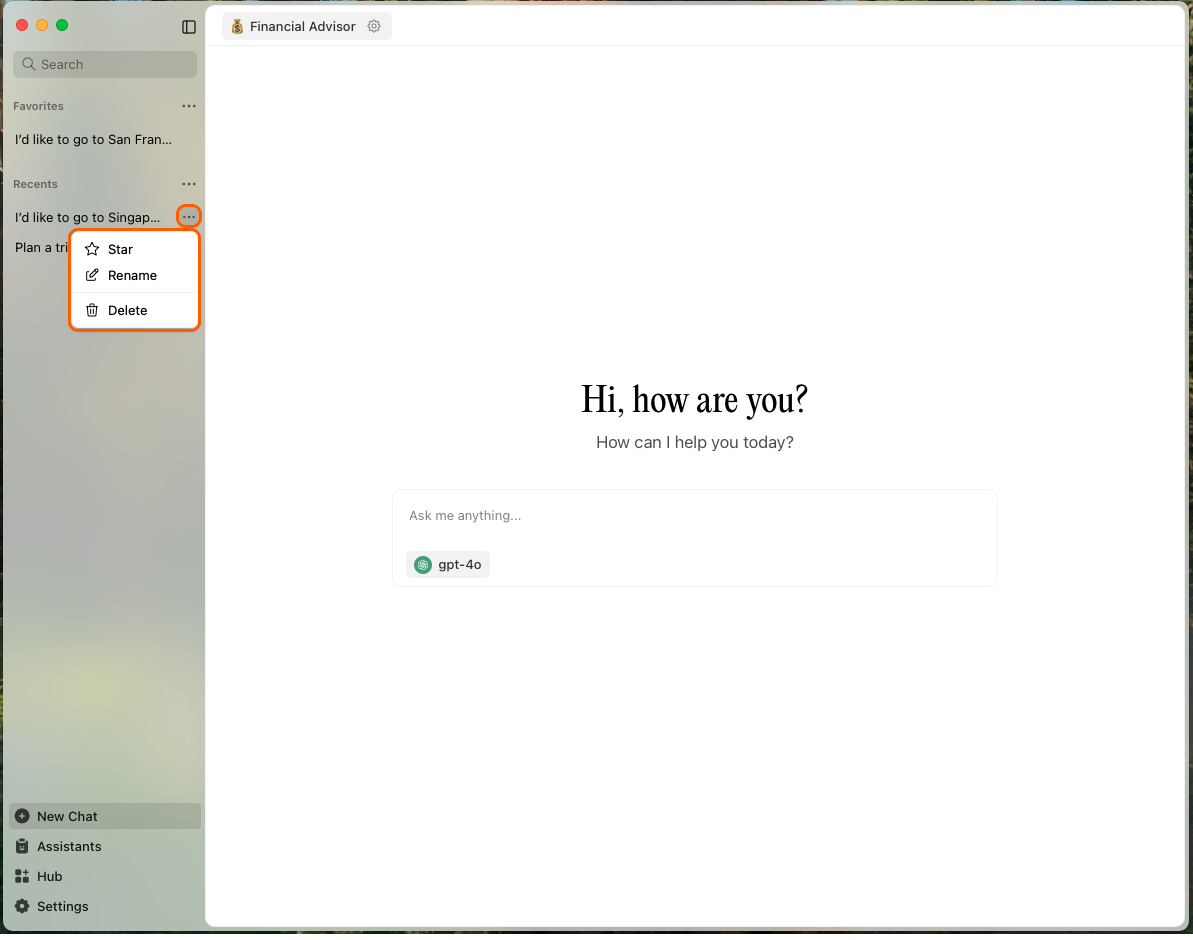

Edit Chat Titles

- Hover over a conversation in the sidebar

- Click the three dots icon

- Click Rename

- Enter a new title and save

Delete Threads

Thread deletion is permanent and cannot be undone.

Single thread:

- Hover over the thread in the sidebar

- Click the three dots icon

- Click Delete

All threads:

- Hover over the Recents category

- Click the three dots icon

- Select Delete All

Advanced Features

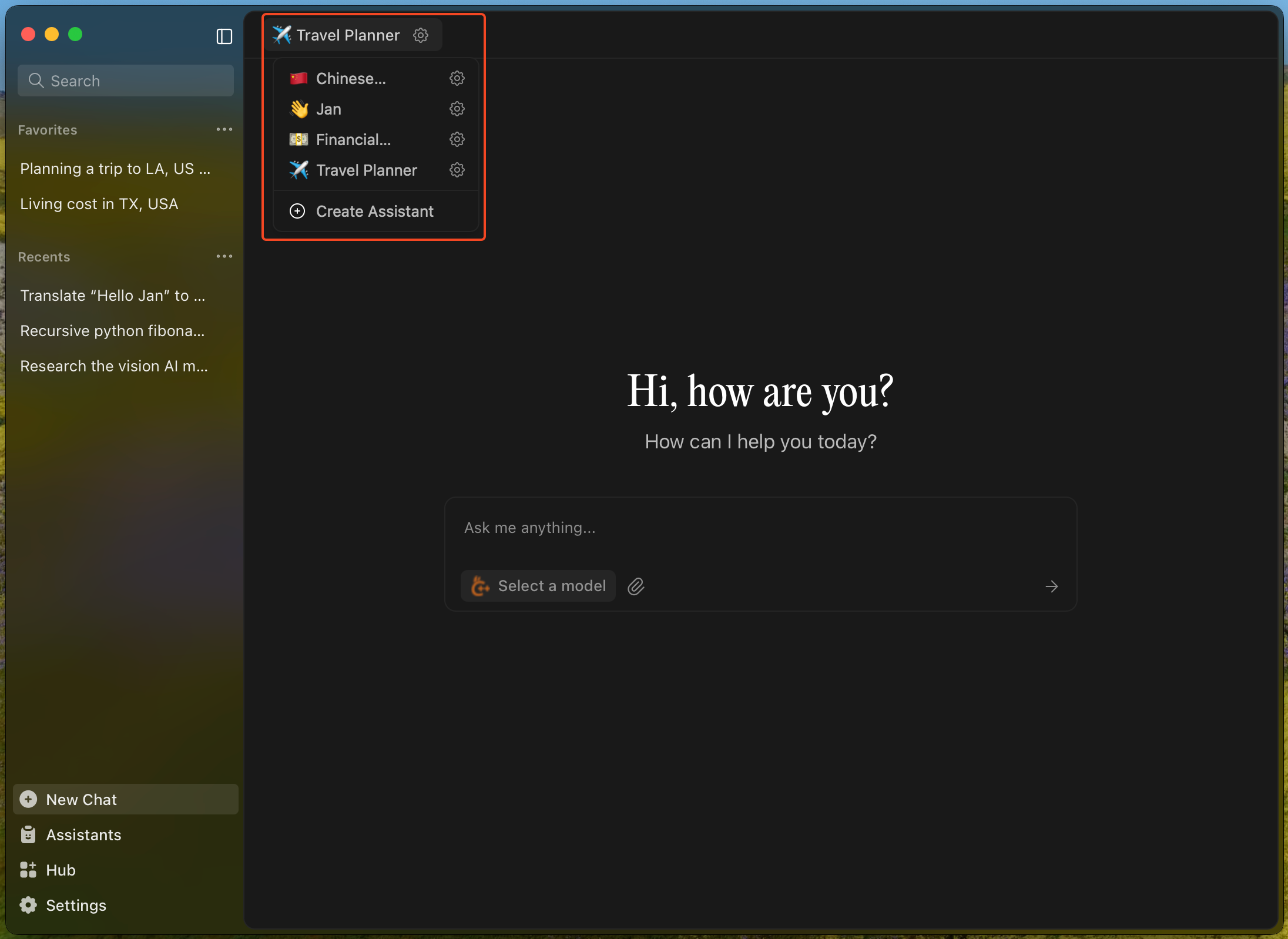

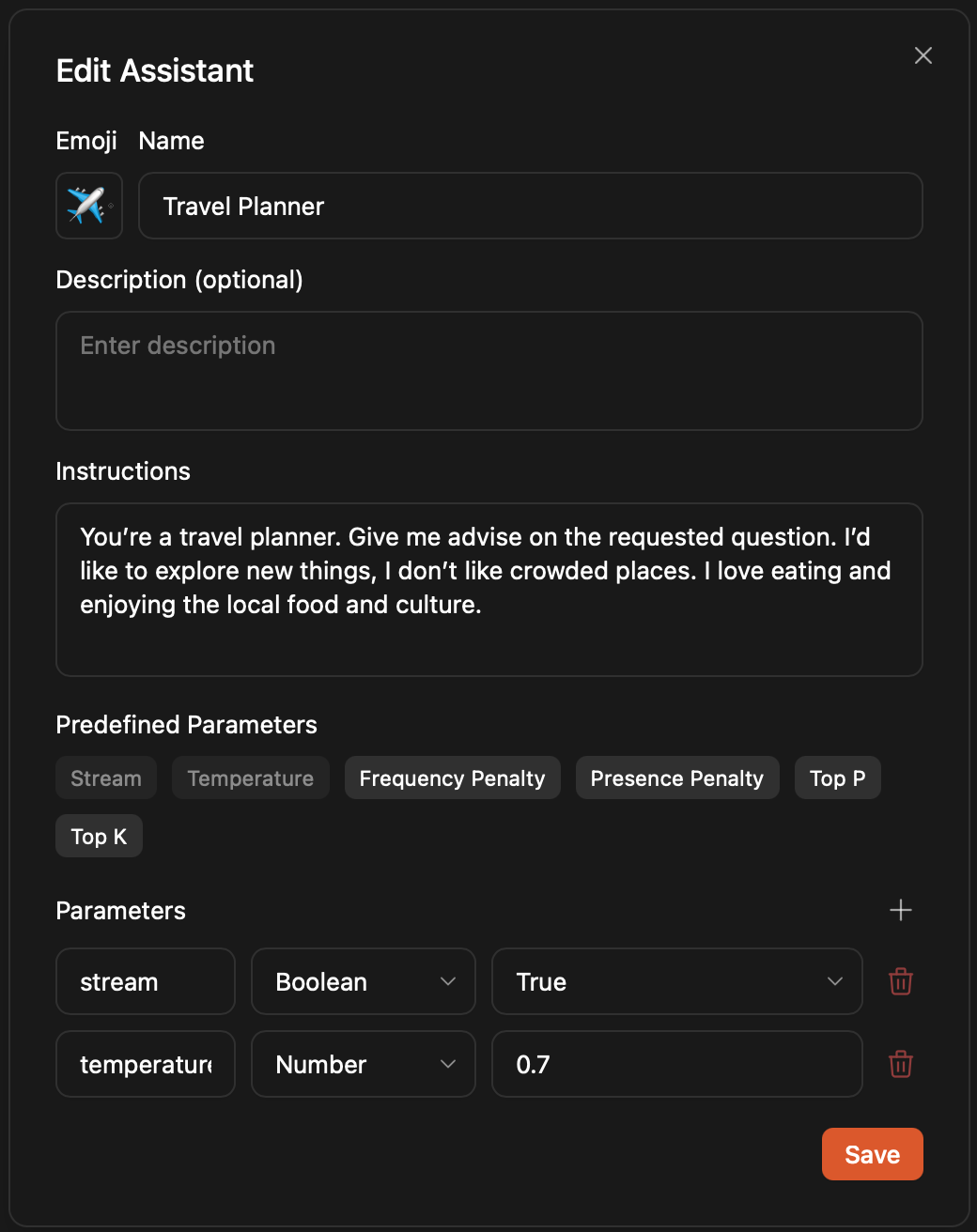

Custom Assistant Instructions

Customize how models respond:

- Use the assistant dropdown in the input field

- Or go to the Assistant tab to create custom instructions

- Instructions work across all models

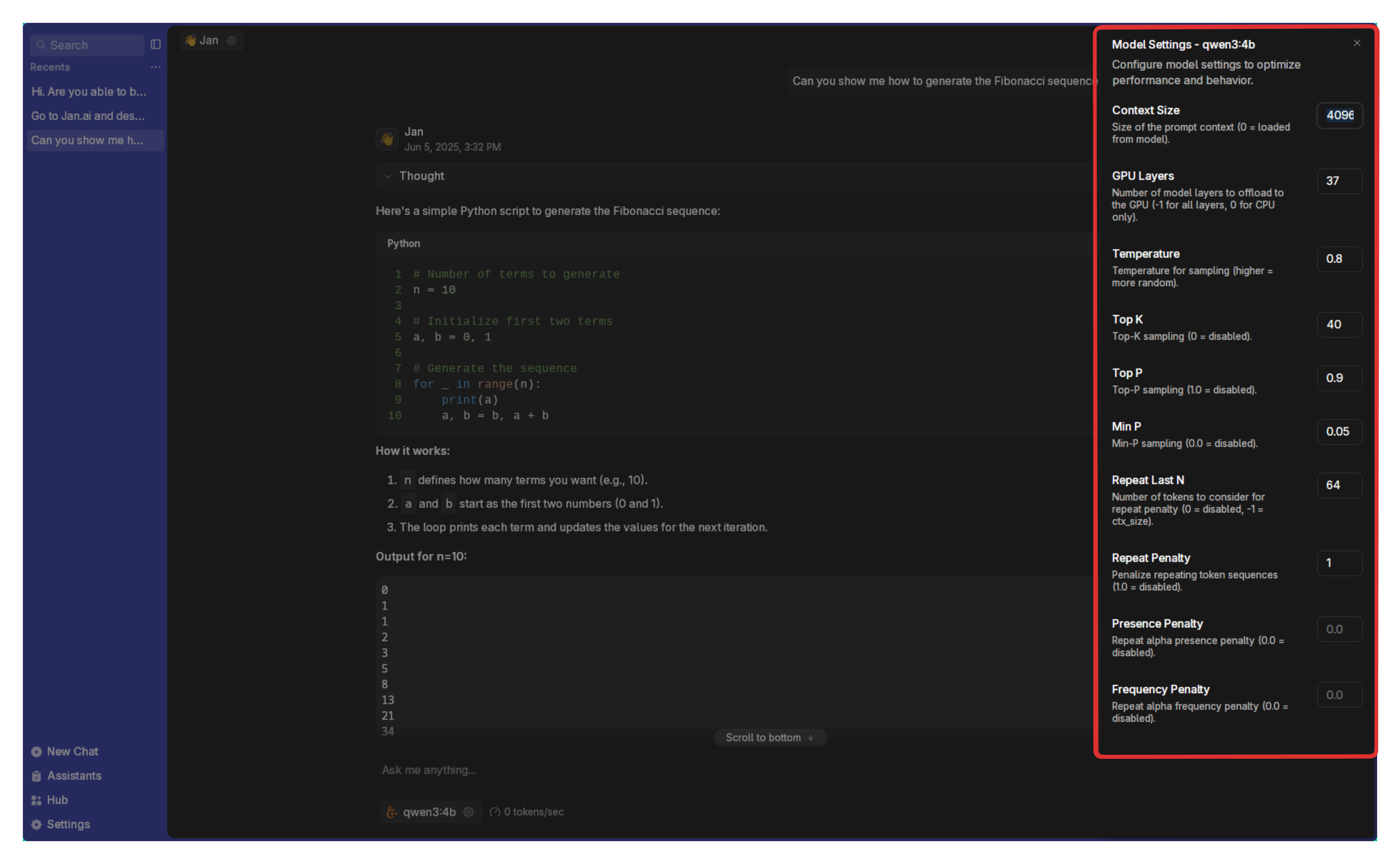

Model Parameters

Fine-tune model behavior:

- Click the Gear icon next to your model

- Adjust parameters in Assistant Settings

- Switch models via the model selector

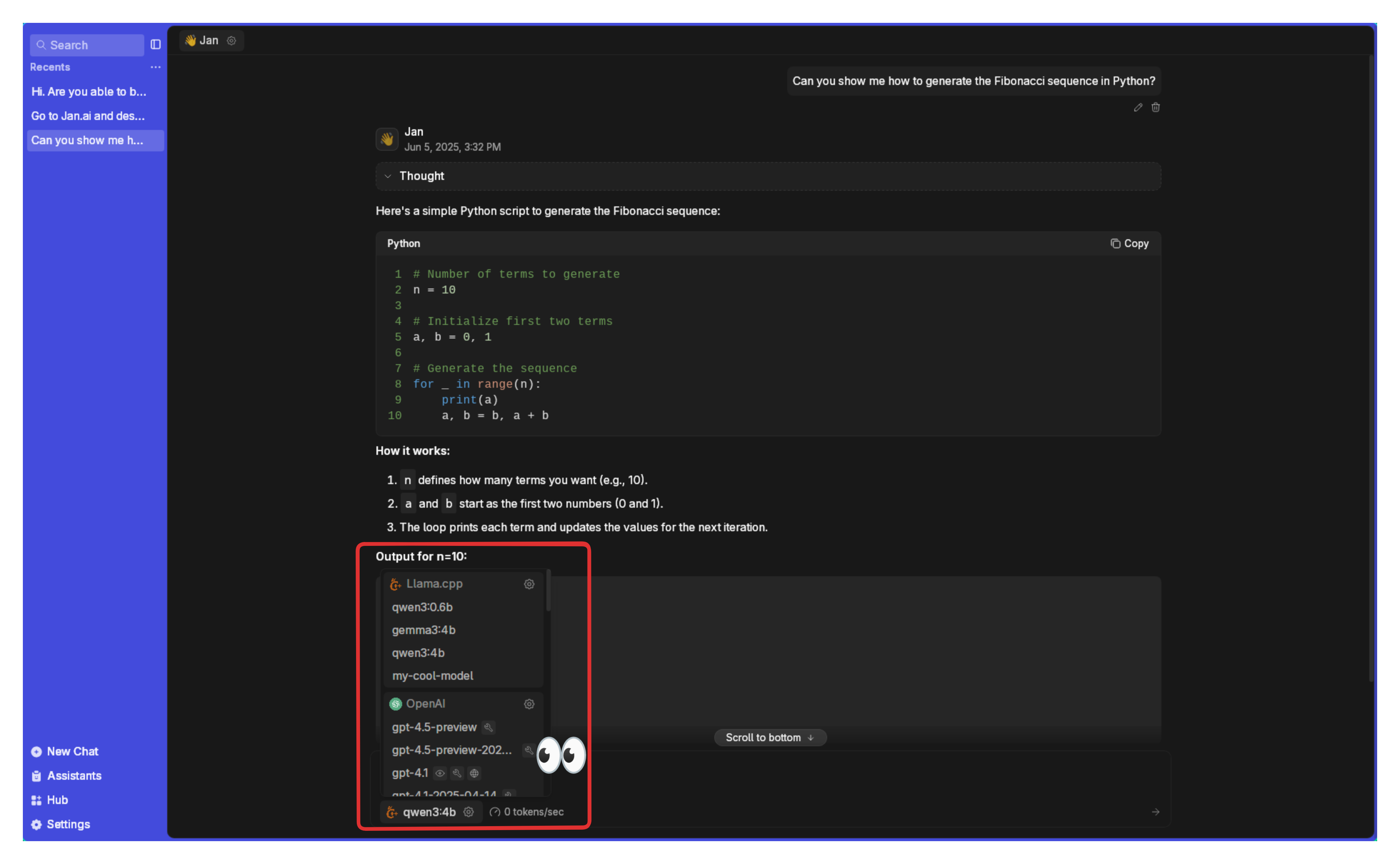

Connect Cloud Models (Optional)

Connect to OpenAI, Anthropic, Groq, Mistral, and others:

- Open any thread

- Select a cloud model from the dropdown

- Click the Gear icon beside the provider

- Add your API key (make sure your account has sufficient credits)

For detailed setup, see Remote APIs.